Prototype platform

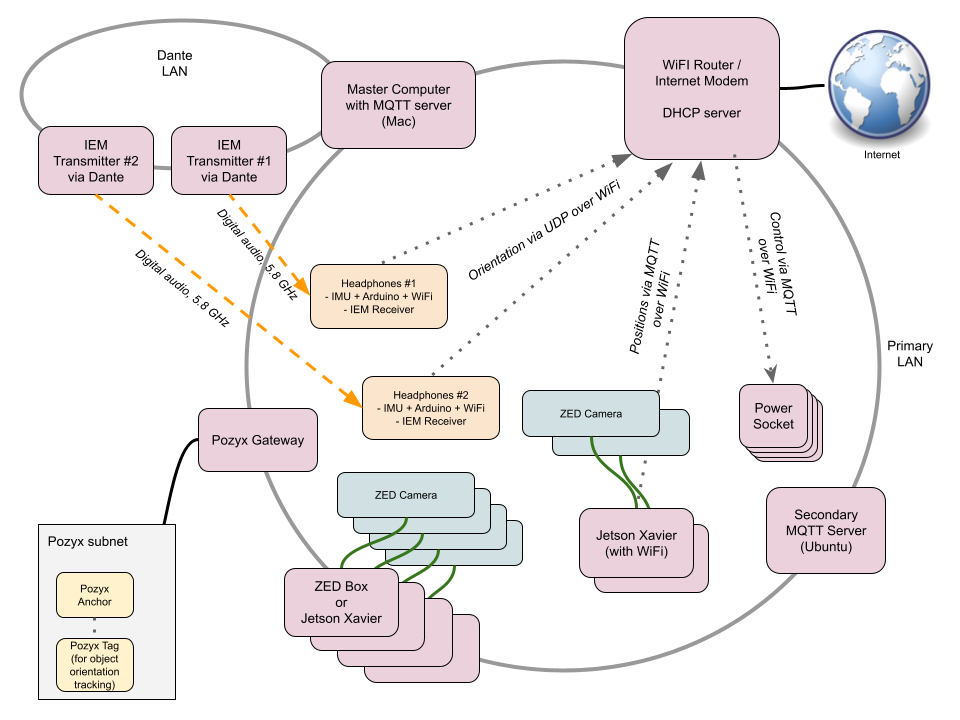

Currently, no comprehensive technical platforms are available for indoor AAR with 6DoF. While some proprietary solutions exist, they are not accessible to the public. To conduct our experiments, we developed our own prototype platform supporting multiple users in a multi-room indoor space.

In our current prototype setup, the users' positions are tracked by an array of stereo cameras running body tracking algorithms. IMUs attached to the users' headphones track their head orientation. The interactive, narrative content runs on a game engine equipped with a spatial audio plugin for virtual acoustics and binaural rendering. Audio is then transmitted back to the headphones by a wireless digital IEM system. A local area network (LAN) connects the different components together, although the Dante devices use their own, isolated LAN for reliability.

We have also experimented with several other tracking methods, namely UWB, BLE AoA, ultrasound, Vive Trackers, and camera-based inside-out tracking. For the current prototype, however, we ended up using the outside-in camera approach because it scales well and suits challenging and complex indoor spaces. While inside-out tracking would offer a highly mobile 6DoF solution, the systems available to us were not compact or performant enough for our purposes.

Master computer

In our solution, we use one master computer (MacBook Pro with M3 processor) for scene interaction and virtual audio processing for all of the simultaneous users (currently two). This ensures a relatively low system latency with enough processor capacity for sufficiently good virtual audio processing. Additionally, the users do not need to carry a mobile device, so they wear only a pair of custom-made headphones.

The computer is running

- the project application (made with Unity), one instance per user

- audio router software

- Dante virtual audio driver

- MQTT server

A Mac was chosen because, in our tests, it had lower audio latency than Windows, at least when running a Unity build. Using a Linux or Windows based system is not ruled out, but compatibility with software and plugins must first be checked, and low enough audio latency confirmed.

Our recent switch from an Intel Mac to the new one with an Apple silicon processor caused some compatibility issues with software and plugins.

Project application

The central piece of software in our setup is a Unity build containing

- sound sources

- scripted interaction (using C# and Visual Scripting)

- 3D model of the venue (for conveniently placing sound sources and visual debugging)

- virtual acoustic simulation (using general reverberation models by dearVR with rough real-time auralisation based on distance to walls)

- binaural decoder (included in dearVR)

- interfaces with the positional tracking systems (using UDP and MQTT protocols)
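As an illustration of the UDP side of such an interface, the sketch below packs and unpacks a head-orientation datagram. The wire format (one byte for the user ID followed by a four-float quaternion), the function names, and the idea that orientation data travels over UDP are all assumptions made for this example; the actual protocol used in the prototype may differ.

```python
import socket
import struct

# Hypothetical wire format (an assumption, not the project's actual protocol):
# little-endian uint8 user id followed by four float32 quaternion
# components in the order (w, x, y, z).
PACKET_FORMAT = "<B4f"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 1 + 4 * 4 = 17 bytes


def encode_orientation(user_id, quat):
    """Pack a user id and a (w, x, y, z) quaternion into one datagram."""
    return struct.pack(PACKET_FORMAT, user_id, *quat)


def decode_orientation(data):
    """Unpack a datagram; raise ValueError if its size is unexpected."""
    if len(data) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(data)}")
    user_id, w, x, y, z = struct.unpack(PACKET_FORMAT, data)
    return user_id, (w, x, y, z)


def receive_one(port, timeout=1.0):
    """Wait for a single orientation datagram on the given UDP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        sock.settimeout(timeout)
        # Read more than PACKET_SIZE so oversized datagrams are rejected
        # by the size check instead of being silently truncated.
        data, _addr = sock.recvfrom(1024)
        return decode_orientation(data)
```

A fixed-size binary datagram keeps parsing trivial on a microcontroller and lets the receiver discard malformed packets with a single length check.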

Dante virtual audio driver

Feeds audio to wireless IEM transmitters via local area network (LAN).

Using Dante to feed audio to the IEM transmitters via the existing LAN makes the setup easy, since no physical audio interface or extra cable runs are needed. When scaling up the system with multiple users and transmitters, Dante could make configuration and setup very convenient, although that is still to be verified.

The Dante virtual audio driver introduces some latency (4 to 10 ms), which could be eliminated by using a hardware Dante interface. However, we've encountered serious compatibility issues with RME audio interfaces and the dearVR plugin on Apple silicon. Therefore, we're proceeding cautiously to avoid spending money on hardware that might not work.

Headphones

Open-back headphones (currently Sennheiser HD 650) equipped with

- Supperware head-tracker using IMUs, connected via UART to Arduino

- Arduino Nano 33 IoT board with a WiFi connection to LAN

- MIPRO MI-58R digital IEM receiver

- Lithium Polymer battery with a charger/booster chip

- ArUco markers for optical identification and IMU calibration

To compensate for the drift typical of IMUs, the system uses the ZED cameras to continuously observe the orientation of the ArUco markers on the headphones, and recalibrates the IMU readings when needed.
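The correction idea can be sketched as a slowly adapting yaw offset. This is a minimal illustration under stated assumptions, not the prototype's implementation: the class name, the gain, the error threshold, and the restriction to yaw only are all hypothetical choices made for the example.

```python
def wrap_angle(deg):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


class YawDriftCorrector:
    """Keeps a slowly adapting offset between IMU yaw and marker-derived yaw."""

    def __init__(self, gain=0.05, threshold_deg=2.0):
        # Both values are illustrative assumptions, not measured settings.
        self.gain = gain                    # fraction of the error removed per sighting
        self.threshold_deg = threshold_deg  # errors below this are ignored
        self.offset_deg = 0.0

    def update_from_marker(self, imu_yaw_deg, marker_yaw_deg):
        """Nudge the offset towards the marker yaw when a marker is visible."""
        error = wrap_angle(marker_yaw_deg - (imu_yaw_deg + self.offset_deg))
        if abs(error) >= self.threshold_deg:
            self.offset_deg = wrap_angle(self.offset_deg + self.gain * error)
        return error

    def corrected_yaw(self, imu_yaw_deg):
        """Apply the current offset to a raw IMU yaw reading."""
        return wrap_angle(imu_yaw_deg + self.offset_deg)
```

Applying only a fraction of the error on each marker sighting avoids audible jumps in the rendered sound field, while the IMU keeps providing low-latency orientation between sightings.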

The headphones setup is still at a prototype stage. Changes for the upcoming versions:

- lower impedance headphones to better match with the IEM receiver

- better component placing and attachment for optimal weight distribution

- neat cabling

- casing

Further tests will be conducted in which the headphones (HD 650) are replaced with a pair of 'Mushrooms', acoustically transparent 3D-printable headphones by Alexander Mülleder1).

ZED camera system

Camera tracking computers

NVIDIA Jetson Xavier computers running Ubuntu interpret the camera signals from Stereolabs ZED stereo/depth cameras with

- body tracking algorithm

- ArUco marker algorithm for identifying users and calibrating when the IMUs drift

- contour detection to know whether curtains on windows are open or closed

The computers send the body joint coordinates of all visible persons, with IDs based on the ArUco markers, to the MQTT server. Some of the computers are connected to the LAN via WiFi for easier installation.
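To make the data flow concrete, the sketch below shows one way such a tracking message could be serialised and read back. The topic layout, the JSON field names, and the joint name `head` are hypothetical; the schema actually used in the prototype is not documented here.

```python
import json

# Hypothetical topic layout and field names (assumptions for illustration).
TOPIC_TEMPLATE = "tracking/{camera_id}/bodies"


def build_message(camera_id, bodies, timestamp):
    """Return (topic, payload) for one frame of tracked bodies.

    `bodies` maps an ArUco-derived user id to a dict of
    joint name -> (x, y, z) coordinates.
    """
    topic = TOPIC_TEMPLATE.format(camera_id=camera_id)
    payload = json.dumps({
        "t": timestamp,
        "bodies": [
            {"id": user_id,
             "joints": {name: list(pos) for name, pos in joints.items()}}
            for user_id, joints in sorted(bodies.items())
        ],
    })
    return topic, payload


def head_positions(payload):
    """Extract the head position of each identified user from a payload."""
    frame = json.loads(payload)
    return {
        body["id"]: tuple(body["joints"]["head"])
        for body in frame["bodies"]
        if "head" in body["joints"]
    }
```

The resulting topic and payload could then be handed to any MQTT client for publishing; on the receiving side, a function like `head_positions` would give the application the listener positions it needs for audio rendering.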

ZED stereo cameras

Multiple ZED stereo cameras from Stereolabs

Pozyx Gateway

We use the Pozyx UWB system for tracking the orientation of some individual narrative objects, such as a picture frame and a door.

We first tried to use UWB for location tracking with the Pozyx system, but we never managed to get reliable readings in our venue, possibly due to electromagnetic reflections, although we have not confirmed the cause. However, we kept the Pozyx as a part of the system, since the Pozyx tags work very well as orientation trackers, and we may still use the location tracking features in some limited area.

Primary LAN

Connects most of the devices together, either by cable or wirelessly, including

- master computer

- MQTT broker

- Jetson Xavier computers w/ ZED stereo cameras

- headphone orientation tracker (Arduino Nano with IMU)

- WiFi router + connection to the Internet

- Pozyx gateway

- remote-controlled mains sockets

Dante LAN

- digital IEM transmitters w/ Dante interface